Large computers first appeared in the middle of the 20th century, and processors, as their main element, have been known for a long time. So why did the first Intel processor go down in history, and what made it remarkable? The Boosty Labs team, which specializes in devops consulting services, answers these questions.

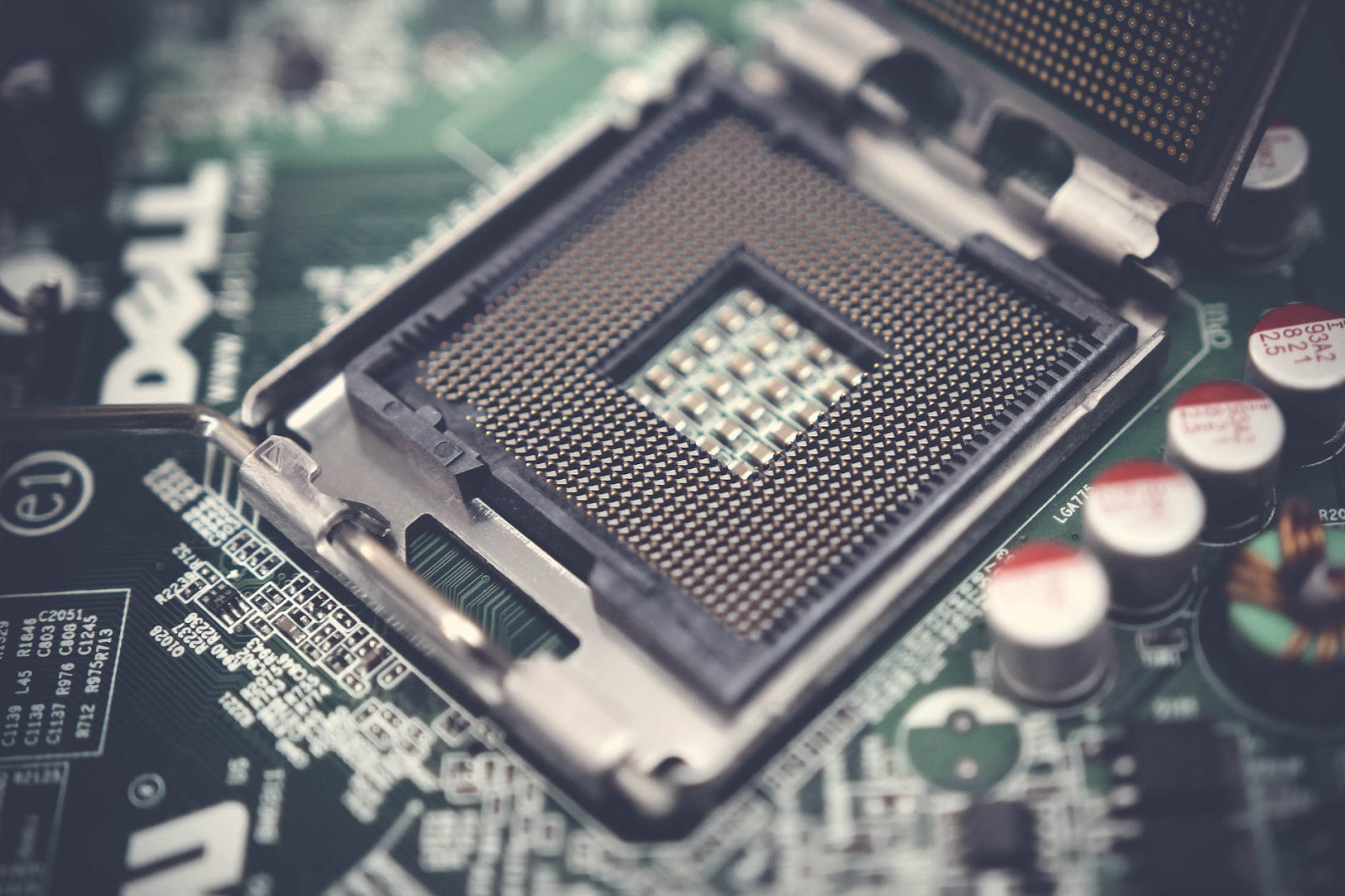

From the time of Charles Babbage (19th century), the processor, or “computer”, was a large mechanical device and later (20th century) a large electronic one for processing information. These were truly large electronic systems made up of many thousands of individual electronic components. The components were assembled in large cabinets (racks), which stood in huge dedicated machine rooms.

Intel’s birth

Scientists and engineers strove for microminiaturization: fitting large electronic circuits into a single microcircuit. However, a working processor needed a great many electronic components, and the technologies of that time could not yet shrink a processor that far.

Time moved forward, steadily bringing the victory of microminiaturization in electronics closer. Intel, originally called NM Electronics, began work on July 18, 1968. The future corporation was founded by Gordon Moore and Robert Noyce, who had both previously worked at Fairchild Semiconductor.

The first Intel processor

After some time, engineer Andrew Grove joined the team, and NM Electronics was renamed Intel. The engineers shared a specific goal: to make semiconductor memory as practical and affordable as possible for ordinary users.

At that time, memory of this type was more than a hundred times more expensive than memory on magnetic media. The cost reduction happened later due to the gradual development of microminiaturization technologies. Many companies contributed to the development of these technologies, and Intel was one of the most notable at that time.

By 1970, the young company was already a fairly successful supplier of memory chips and was the first to start selling a memory chip with a capacity of 1 kilobit. The chip was very popular, and the company already employed more than 100 specialists.

At that time it was a real breakthrough. The scale of such an achievement is hard to imagine now, when such microcircuits have entirely different capacities, measured in gigabytes and even terabytes.

Chips for calculators

Representatives of Busicom (Japan) took notice of Intel’s success. They decided to commission chips for a popular family of programmable calculators.

Programmable calculators had a number of memory cells for temporary storage of numbers, along with a processor that executed programs operating on those stored numbers. In other words, programmable calculators were a first step toward future computers, but they were not yet real computers.

At that time, microcircuits were designed for one specific device and could not be reused in other products. In other words, Busicom was ordering chips solely for its own calculators, and competitors would have had to design or order such microcircuits on their own.

Universal microcircuit: from idea to realization

Initially, Intel was tasked with developing at least 12 chips with a unique architecture and specific functionality. Apparently, the Japanese needed to make at least 12 different models of their programmable calculators.

However, Intel engineer Ted Hoff proposed a completely different path. His idea was to develop one universal microcircuit controlled by a program stored in semiconductor memory. The resulting design used four kinds of chips: the 4004 processor, an I/O chip, RAM (random access memory) and ROM (read-only memory).

In fact, Ted suggested making one programmable chip instead of 12 different ones. This single chip could be programmed in any number of ways, yielding not only the 12 devices ordered by Busicom but any number of different devices for solving countless tasks.

Thanks to Intel’s original solution, a development commissioned by one Japanese company became a universal design for all kinds of devices in which chips can and should be used to process information.
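The idea of one chip whose behavior is set by a program in memory can be illustrated with a toy example. The sketch below is a minimal, hypothetical accumulator machine written in Python; the opcodes `IN`, `ADD`, `MUL` and `OUT`, the `run` function and the two sample programs are invented for illustration and are not the 4004 instruction set.

```python
def run(program, inputs):
    """Execute a list of (opcode, operand) pairs on a toy accumulator machine.

    The "hardware" (this function) never changes; only the program does.
    The opcodes are hypothetical and do not match any real processor.
    """
    acc = 0                     # single accumulator register
    pending = list(inputs)      # values waiting on the input port
    outputs = []                # values written to the output port
    for opcode, operand in program:
        if opcode == "IN":      # read the next input value into the accumulator
            acc = pending.pop(0)
        elif opcode == "ADD":   # add a constant to the accumulator
            acc += operand
        elif opcode == "MUL":   # multiply the accumulator by a constant
            acc *= operand
        elif opcode == "OUT":   # emit the accumulator
            outputs.append(acc)
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return outputs


# Two different "devices" built from the same machine, differing only in program.
TRIPLE = [("IN", None), ("MUL", 3), ("OUT", None)]
ADD_TEN = [("IN", None), ("ADD", 10), ("OUT", None)]

print(run(TRIPLE, [4]))   # [12]
print(run(ADD_TEN, [4]))  # [14]
```

The same `run` function serves both programs, which is the point of the universal approach: a new device needs a new program in memory, not a new chip.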

In April 1970, the company hired engineer Federico Faggin. His task was to design the 4004 chip in accordance with Hoff’s ideas. The first working samples were produced in January 1971, all stages of development were completed in March, and industrial production started in June 1971.

So the 4004 processor became, in effect, the first working universal “computer” that could be programmed to solve a wide variety of problems. Technically, this processor was implemented as a single chip, and what that chip did now depended entirely on how it was programmed.

Much more powerful processors were created later, such as the very popular Intel 8080 and many others. But the Intel 4004 microprocessor was the very first in this line of highly successful developments.

Intel 4004 microprocessor rights

Initially, the customer, Busicom, held the rights to the new chip. Faggin understood that the new development would be widely used because of its versatility and developers’ desire to miniaturize computers.

As a result, he was able to convince Intel’s management to acquire the rights to the new chip. Busicom was in significant financial difficulty at the time and agreed to sell the rights for $60,000. So Intel became the owner of its own unique development, ahead of its time and of possible competitors.

Key Features and Applications of the Intel 4004

In November 1971, the 4004 processor was announced as part of the MCS-4 microcomputer chip set. The four-bit chip consisted of 2300 transistors and ran at a clock frequency of up to 740 kHz (kilohertz), which works out to roughly 92,000 instructions per second.

Modern processors are 64-bit microcircuits running at the GHz speeds we have all grown used to. But those 4 bits and a few hundred kilohertz were a huge success for microelectronics. After all, it was the first commercially available microprocessor, open to everyone.
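A 4-bit word can hold values from 0 to 15, which is enough for one decimal digit, so a calculator can work through a long number one digit at a time. The following is a minimal sketch of that idea in Python, not actual 4004 code; the helper names `add_bcd`, `to_digits` and `from_digits` are assumptions made for this example.

```python
def add_bcd(a_digits, b_digits):
    """Add two equal-length decimal numbers stored one digit per 4-bit word.

    Digits are given least-significant first, each in the range 0..9.
    Returns the sum digits, least-significant first, with a final carry digit.
    """
    result = []
    carry = 0
    for a, b in zip(a_digits, b_digits):
        total = a + b + carry       # at most 9 + 9 + 1 = 19
        if total > 9:               # decimal adjust: keep one digit, carry one
            total -= 10
            carry = 1
        else:
            carry = 0
        result.append(total & 0xF)  # the stored digit always fits in 4 bits
    result.append(carry)
    return result


def to_digits(n, width):
    """Split a non-negative integer into `width` decimal digits, lowest first."""
    return [(n // 10**i) % 10 for i in range(width)]


def from_digits(digits):
    """Rebuild an integer from decimal digits given lowest first."""
    return sum(d * 10**i for i, d in enumerate(digits))


print(from_digits(add_bcd(to_digits(1971, 4), to_digits(4004, 4))))  # 5975
```

Each loop iteration handles a single 4-bit digit plus a carry, so the width of the numbers is limited only by how many digits are stored, not by the 4-bit word itself.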

Such a microcircuit, built on a single semiconductor die measuring 3×4 mm, could perform the functions of the large computers known at that time. The electronic computers of that era filled huge halls and needed industrial cooling and air conditioning systems. And here, instead of all that expensive bulk, was one tiny microcircuit in a standard package, like many other chips of the time, priced at only about $200.

Initially, the 4004 processor was developed as the main computing element for calculators. Later it found wide use in other devices: for example, in medical equipment for blood tests, in controlling networks of traffic lights, and in many other applications.

There is also a beautiful legend that the Intel 4004 was used in the Pioneer 10 space probe. Alas, this is only a legend, though it does not detract from the true significance of the development and release of the first microprocessor, the 4004.

Conclusions

No one at the time could have imagined how important that very first 4004 processor would turn out to be. A little later, processors became the main components of numerous devices and systems, including office and household appliances.

Processors are part of personal computers, laptops, tablets, smartphones and other devices that now routinely accompany us in our modern, information-saturated life.